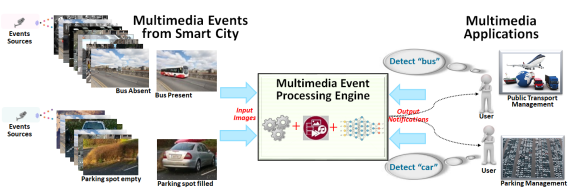

The enormous growth of multimedia content in the Internet of Things (IoT) domain creates the challenge of processing multimedia streams in real time; the Internet of Multimedia Things (IoMT) has therefore emerged as a concept in the field of smart cities. Current real-time image processing systems are expected to perform robustly, but they are designed for specific domains such as traffic management, security, parking, and supervision activities. Existing event-based systems process event streams according to user subscriptions and focus only on structured events, such as energy consumption events, RFID tag readings, financial events, and packet loss events. However, multimedia content occupies a significant share of IoT data compared with the scalar data obtained from conventional IoT devices. Because existing IoT event-based systems lack support for processing multimedia events, there is a need for an IoMT-based event processing system that can also process images and videos.
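To make the idea of an image as a native event type concrete, here is a minimal publish-subscribe sketch, assuming that an object detector has already turned each image into a set of concept labels. The class and function names (`Subscription`, `ImageEvent`, `match`) are hypothetical and do not come from the thesis; the sketch only shows how a subscription on a visual concept could be matched against an incoming image event.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Subscription:
    """A user's interest in a visual concept (hypothetical structure)."""
    subscriber_id: str
    concept: str


@dataclass
class ImageEvent:
    """An image published by an IoMT device such as a traffic camera.

    Assumption: `labels` stands in for the concepts an object detector
    (e.g. YOLO) found in the image; the detector itself is out of scope.
    """
    source: str
    labels: set[str] = field(default_factory=set)


def match(event: ImageEvent, subscriptions: list[Subscription]) -> list[tuple[str, str]]:
    """Return (subscriber_id, concept) pairs for every subscription whose
    concept was detected in the image event."""
    return [(s.subscriber_id, s.concept)
            for s in subscriptions
            if s.concept in event.labels]


if __name__ == "__main__":
    subs = [Subscription("parking-app", "car"),
            Subscription("transit-app", "bus"),
            Subscription("safety-app", "dog")]
    event = ImageEvent("camera-17", {"car", "person"})
    print(match(event, subs))  # [('parking-app', 'car')]
```

The hard part in practice is producing `labels` for concepts no detector has been trained on, which is the problem the rest of this abstract addresses.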

Multiple smart city applications may require processing numerous seen and unseen concepts (an unbounded vocabulary) expressed as subscriptions. Deep neural network-based techniques are effective for image recognition, but having to train classifiers for unseen concepts may increase the overall response time of multimedia event processing models. These models also require massive amounts of annotated training data (i.e., images with bounding boxes), and it is not practical to have trained classifiers or annotated training data available for the large number of unseen classes in smart cities. In this thesis, I address the problem of training classifiers online for unseen concepts, so that user queries involving multimedia events can be answered with minimal response time and maximal accuracy in IoMT-based systems.

The contributions of this thesis are manifold. First, I analyze the trends, challenges, and opportunities in the state of the art for IoMT-based systems. I then propose a generalizable event processing approach that consumes IoMT data as a native event type and optimizes it for scenarios involving seen, unseen, and partially unseen concepts. The first model, based on domain-specific classifiers, enables subscription-based feature extraction in event processing and reduces testing time using an elementary classifier division and selection approach. Next, I propose a hyperparameter-based multimedia event detection model that handles completely unseen concepts and optimizes the time needed to train from scratch. For partially unseen concepts, I propose a domain adaptation-based model that transfers knowledge from seen to unseen concepts (e.g., bus → car) and reduces the overall response time of the classifiers. The final model addresses the difficulty of collecting large numbers of images with bounding-box annotations to train object detection models on unseen concepts: I propose a detector, named UnseenNet, that trains unseen classes using only image-level labels, without bounding-box annotations.
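The split between seen, partially unseen, and completely unseen concepts can be sketched as a simple routing decision. This is an illustrative sketch only, not the thesis implementation: the functions `select_classifiers` and `plan_concept`, the callable classifier interface, and the hand-written `similar_seen` mapping (standing in for whatever relatedness measure a real system would use) are all hypothetical.

```python
from typing import Callable, Optional

# Hypothetical interface: a classifier maps an image to "concept present or not".
Classifier = Callable[[object], bool]


def select_classifiers(subscribed: set[str],
                       elementary: dict[str, Classifier]) -> dict[str, Classifier]:
    """Classifier selection (sketch): keep only the elementary, per-concept
    classifiers named in a subscription, so testing cost follows the
    subscriptions rather than the whole vocabulary."""
    return {c: elementary[c] for c in sorted(subscribed) if c in elementary}


def plan_concept(concept: str,
                 elementary: dict[str, Classifier],
                 similar_seen: dict[str, str]) -> tuple[str, Optional[str]]:
    """Decide how to obtain a classifier for one subscribed concept.

    - seen: a trained classifier exists -> reuse it
    - partially unseen: a related seen concept exists -> adapt from it
    - completely unseen: nothing related -> train from scratch
    """
    if concept in elementary:
        return ("reuse", concept)
    source = similar_seen.get(concept)
    if source is not None and source in elementary:
        return ("adapt", source)
    return ("train_from_scratch", None)


if __name__ == "__main__":
    # Toy classifiers; a real system would wrap trained detectors.
    elementary = {"bus": lambda img: True, "person": lambda img: False}
    similar_seen = {"car": "bus"}  # assumption: hand-written relatedness
    for concept in ["bus", "car", "giraffe"]:
        print(concept, plan_concept(concept, elementary, similar_seen))
    print(sorted(select_classifiers({"bus", "car"}, elementary)))
```

Keeping the routing separate from the classifiers means each branch (reuse, adapt, train from scratch) can be optimized independently, which mirrors how the abstract assigns a different model to each scenario.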

I primarily use You Only Look Once (YOLO), Single Shot MultiBox Detector (SSD), and RetinaNet for object detection, with seen and unseen classes drawn from the Pascal VOC, Microsoft COCO, and OpenImages detection datasets. On real-time multimedia events, the proposed multimedia event processing models achieve an accuracy of 66.34% within 2 hours of response time using the classifier division and selection approach, 84.28% within 1 hour using hyperparameter-based optimization, and 95.14% within 30 minutes using domain adaptation-based optimization. Lastly, evaluations of the domain adaptation-based model without bounding boxes show that UnseenNet outperforms the baseline approaches and reduces training time from days (or more than 5.5 hours) to less than 5 minutes.
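For scale, consider only the more-than-5.5-hours baseline; the multi-day baseline would give a larger factor. With $T_{\text{before}}$ and $T_{\text{after}}$ denoting training time before and after (symbols introduced here for illustration), going from more than 330 minutes to less than 5 minutes is a speedup of more than 66 times:

```latex
\[
\frac{T_{\text{before}}}{T_{\text{after}}}
  > \frac{5.5 \times 60\ \text{min}}{5\ \text{min}}
  = \frac{330\ \text{min}}{5\ \text{min}}
  = 66
\]
```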

Team

Asra Aslam

Dr Edward Curry

Institution: NUI Galway

Funder

Relevant Publications

| Year | No. | Publication |
|------|-----|-------------|
| 2021 | [5] | Asra Aslam, Edward Curry, "A Survey on Object Detection for the Internet of Multimedia Things (IoMT) using Deep Learning and Event-based Middleware: Approaches, Challenges, and Future Directions", In Image and Vision Computing, vol. 106, Article 104095, 2021. |
| 2021 | [4] | Asra Aslam, Edward Curry, "Investigating Response Time and Accuracy in Online Classifier Learning for Multimedia Publish-Subscribe Systems", In Multimedia Tools and Applications (MTAP), 2021. |
| 2020 | [3] | Asra Aslam, Edward Curry, "Reducing Response Time for Multimedia Event Processing using Domain Adaptation", In Proceedings of the 2020 International Conference on Multimedia Retrieval, ACM, New York, NY, USA, pp. 261-265, 2020. |
| 2018 | [2] | Asra Aslam, Edward Curry, "Towards a Generalized Approach for Deep Neural Network Based Event Processing for the Internet of Multimedia Things", In IEEE Access, vol. 6, pp. 25573-25587, 2018. |
| 2017 | [1] | Asra Aslam, Souleiman Hasan, Edward Curry, "Challenges with Image Event Processing", In Proceedings of the 11th ACM International Conference on Distributed and Event-based Systems, ACM, New York, NY, USA, pp. 347-348, 2017. |